Conversational User Interfaces will revolutionise how your organisation engages with your audience. But only if they are developed with a strong user-centred design process from the outset.

This article:

- Defines conversational user interfaces e.g. voice assistants and chatbots

- Explores how they evolved from graphical user interfaces

- Outlines how to design a conversation

Download our Conversational UI Guide if you’d like to understand how to take a user-centred approach to designing and developing your voice assistant or chatbot.

What is a conversational user interface?

Once, graphical user interfaces taught us how to interact with computers via keyboard, mouse or touchscreen. User Experience (UX) Designers’ role was to research, wireframe, design and test interfaces to facilitate this process.

Conversational user interfaces (UI) have transformed this relationship. Powered by artificial intelligence (AI) and language recognition software, these interfaces let humans interact naturally via written or spoken language.

It’s exciting because it feels so ‘human’ and intuitive. Personal computers have, unbelievably, only been around since the 1970s, whereas we’ve learned via speech and language for up to 100,000 years.

Currently, there are two types of conversational UI.

- Natural-language interfaces – typically called ‘voice assistants’, these solve specific tasks for users. They include the Google Assistant, Amazon Alexa, Microsoft’s Cortana and Apple’s Siri.

- Message-based interfaces – typically called ‘chatbots’, the range of queries these bots can handle depends on the AI they’re powered by.

Of course, some interfaces combine elements of both. Apple’s Siri tends to read out the information on a screen; the experiences are mirrored across voice and message. In contrast, the Amazon Echo Show uses the two in partnership. When asked about the weather, for example, the screen displays the day’s highs and lows in temperature while the voice assistant discusses the forecast in a more conversational way.

Through AI, conversational user interfaces can learn from interactions to improve their responses over time. Now, we’ll dive into each conversational interface and the potential benefits for your organisation.

The rise of voice assistants

Sales figures from Amazon and Google indicate how quickly voice assistants are taking over our homes. Google announced that it had sold more than one Google Home Mini device every second since its launch on October 19th 2017. That amounted to a staggering 7.5 million devices sold in just two months.

The Google Assistant itself is even more widespread. It’s available on 400 million devices, such as Google Home, Google Home Mini, Android and iOS, watches, laptops and even smart TVs. If we allocated just one Google Assistant per household, there would be one present in every home of the G7 (the United States, United Kingdom, Germany, France, Italy, Japan and Canada). Adding Amazon’s sales figures would only increase this.

Voice assistant uptake

We’ve all heard this stat bandied about: 50% of searches will be performed through voice by 2020. In another article, I explore the next generation of searches – and discuss how this is likely an overstatement.

More realistic statistics focus on engagement levels. A recent study found that 49% of participants preferred voice assistants to humans in call centres because they got answers faster. Participants also said the conversational interfaces were more convenient than a website, app or even physical stores. Over a third of consumers report that they have bought products through their voice assistant.

Your business can get involved by optimising your content for voice search (explained in the article above) or developing a ‘skill’ for Amazon Alexa.

Our Alexa Skill examples – Jim.Care and UX Companion

As an expert voice assistant development agency, Cyber-Duck has developed several apps using an open-source voice AI framework that allows us to quickly prototype voice assistants. We can then turn our prototypes into Amazon Alexa Skills that can be published on the Alexa Skills Store. The same API layer can also power chatbots.

Jim.Care is our most exciting project to date. At Cyber-Duck, we love to tackle real-life challenges that people face by providing user-focused solutions that can make a difference. This time, we were inspired by a simple statistic and a family connection. Over 3.4 million people over 65 suffer a fall that causes serious injury every year.

Our CEO Danny’s neighbour regularly fell and lay there for hours, with no way to contact friends or family. So we built Jim.Care, a conversational user interface that assists with social care. By combining Amazon Dash buttons, Amazon Alexa and passive infrared (PIR) sensors, it lets elderly people connect with carers through the press of a button, a voice command or a detected lack of movement. Watch the video below to find out more.

UX Companion is our second example. First released in 2015, UX Companion is a handy glossary of UX terms. It’s intended to help UX designers and marketers refresh their knowledge. It’s done well on iOS and Android devices, amassing over 50,000 downloads and two award wins for best agency marketing campaign.

Our UX Companion in action on the PWA and Amazon Alexa.

But Cyber-Duck prides itself on accessibility, so our developers used AWS Lambda to turn UX Companion into an Alexa skill. Discover more (and download it for yourself!) here. We’ve continued building experience at our Quack Hack by developing a proof-of-concept holiday companion app that helps you to budget your spending money.

The growth of chatbots

Message-based interfaces, or chatbots, have a longer history, dating back to 1966. Joseph Weizenbaum of MIT built ‘Eliza’, a rudimentary chatbot that could give 200 responses to users. But it couldn’t be considered smart.

Advances in AI have transformed the intelligence (and therefore utility) of chatbots. This is critical in the current climate of conversational commerce. Over half of consumers are seeking customer service through social media, but humans can’t answer queries around the clock. Chatbots can, and users appreciate it: 69% of consumers prefer communicating with them. You can develop a chatbot for social media (Twitter and Facebook) or your own website.

Chatbot examples

At our latest Quack Hack, we started developing our very own chatbot, Avery. It aims to improve user engagement and streamline the user journey on our own website. Read more about the development of Avery and watch a demo in our hackathon blog.

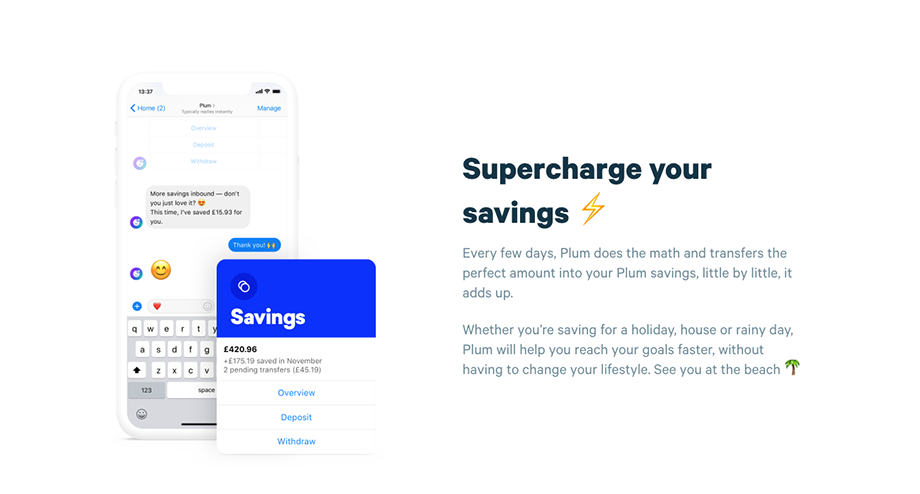

Our favourite example of bots in the wild is Plum, available for Facebook Messenger, iOS and Android. It analyses your transactions to learn about your income/spending; then helps you save and invest in a conversational way. While it’s self-aware – i.e. explains that it’s a bot – it adds humanity via emojis and a helpful personality. Users don’t get swamped as information is ‘chunked’ into bites.

Explore our article on the best conversational user interface examples by sector for more inspiration.

Designing conversations

Designing conversations is a tricky balance. You must try to make them feel ‘real’ and authentic, while providing ways for users to complete tasks with ease.

Following a user-centred design process is key. Download our UX Handbook to understand the components of this process. You would kick off with user, stakeholder and competitor research; then, create personas and user stories to sum up who would be using the bot and why.

From here, you can turn towards designing the actual conversations. Three main components make up a conversational interface:

- Intents – the purpose behind a user input

- Entities – what the bot uses to recognise an intent

- Dialog states – how the bot shapes a conversation flow

Restaurant example

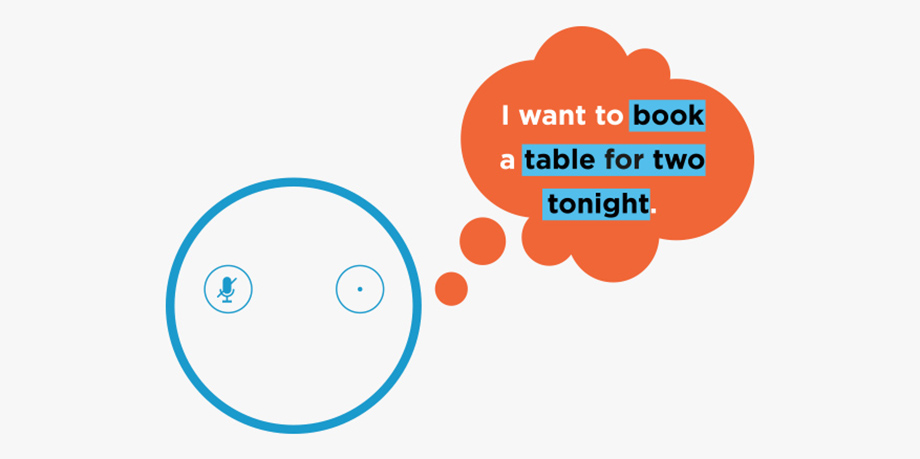

Let’s take the example of booking a table at a restaurant – the intent behind the utterance of ‘I want to book a table for two tonight’.

If a user says ‘I want to book a table for two tonight’ to our restaurant’s conversational user interface (CUI), the entities would be ‘book’, ‘table’, ‘for two’ and ‘tonight’. Entities help your bot identify and understand the key pieces of information that indicate an intent. Here, they are all the CUI needs to understand that the user wants to book a table.

Finally, the bot enters into a dialog state with the user. By pushing the conversation forward, the bot can gather more information to complete the intent of a user. For instance, the bot knows the booking is for tonight, but it also needs to know what time it should book the table for.
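
To make the three components concrete, here is a minimal Python sketch of the restaurant example. It is only an illustration: the slot names, prompts and regular expressions are our own assumptions, and a production bot would hand entity recognition to an NLP service rather than pattern matching.

```python
import re
from dataclasses import dataclass, field

# Hypothetical slots the 'book a table' intent needs before it can complete.
REQUIRED_SLOTS = ("party_size", "date", "time")

NUMBER_WORDS = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5, "six": 6}


@dataclass
class Booking:
    """Holds the entities gathered so far in the conversation."""
    slots: dict = field(default_factory=dict)

    def missing(self):
        return [name for name in REQUIRED_SLOTS if name not in self.slots]


def extract_entities(utterance):
    """Pull out any booking entities found in the utterance (toy patterns)."""
    text = utterance.lower()
    entities = {}

    size = re.search(r"\bfor (\d+|" + "|".join(NUMBER_WORDS) + r")\b", text)
    if size:
        word = size.group(1)
        entities["party_size"] = int(word) if word.isdigit() else NUMBER_WORDS[word]

    day = re.search(r"\b(tonight|today|tomorrow)\b", text)
    if day:
        entities["date"] = day.group(1)

    time = re.search(r"\bat (\d{1,2}(?::\d{2})?\s?(?:am|pm))", text)
    if time:
        entities["time"] = time.group(1)

    return entities


def next_prompt(booking):
    """Dialog state: ask for the first missing slot, or confirm the booking."""
    prompts = {
        "party_size": "How many people is the booking for?",
        "date": "Which day would you like to book?",
        "time": "What time should I book the table for?",
    }
    missing = booking.missing()
    if missing:
        return prompts[missing[0]]
    s = booking.slots
    return f"Booking a table for {s['party_size']} {s['date']} at {s['time']}."
```

Feeding in ‘I want to book a table for two tonight’ fills the party size and date, so the bot’s next prompt asks for the time. Once the user replies with something like ‘at 7pm’, every slot is filled and the bot can confirm.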

But it doesn’t end there. Language is complex: there are countless ways to phrase a request with the same intent behind it. Natural Language Processing (NLP) helps chatbots process and understand the context to provide accurate actions or information.

Begin by defining all intents that a bot must identify. Taking our example above, if we were building a restaurant bot, the intents could cover booking a table, changing the time of a booking, checking opening hours, asking about the type of food and more.

Then, you need to equip the bot with the information required to identify your intents – the entities. Dialog states need to be scripted so the bot can find out more if it doesn’t know enough already.
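
The first step, listing the intents a bot must identify, could be sketched like this in Python. The intent names and keyword sets are hypothetical, and the keyword-overlap scoring is a deliberately simple stand-in for the NLP service a real bot would use.

```python
import re

# Hypothetical intent catalogue for a restaurant bot. The keyword sets are
# illustrative assumptions; an NLP service would learn these from examples.
INTENTS = {
    "book_table": {"book", "reserve", "reservation", "table"},
    "change_booking": {"change", "move", "reschedule"},
    "opening_hours": {"open", "close", "hours"},
    "cuisine": {"food", "menu", "vegetarian", "serve"},
}


def identify_intent(utterance):
    """Return the intent whose keywords overlap most with the utterance.

    Returns None when no keyword matches, so the caller can respond with
    an error-handling message instead of guessing.
    """
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    scores = {name: len(words & keywords) for name, keywords in INTENTS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None
```

Different phrasings, such as ‘Can I reserve a table for Friday?’ and ‘I want to book a table’, land on the same intent. That is the behaviour you would expect an NLP layer to provide far more robustly.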

It’s important to create a bit of personality in your script. But bear in mind that 48% of consumers prefer chatbots that solve issues; functionality trumps personality.

Error handling

As unexciting as it sounds, planning error handling for your conversational user interface is critical. When it can’t cope with a variation in a user’s phrasing, your conversational interface must still have something to say in response.

Design an appropriate answer that’s contextual and helps to move the conversation forwards. It should:

- Clarify what the bot doesn’t understand

- Confirm what the bot is designed for

- Present an engaging call-to-action

- Feed back to the production team for future development
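
As a rough illustration of the four points above, a fallback handler might look like the Python sketch below. The capability list, wording and logger name are assumptions made for the example, and the list passed in stands in for whatever reporting your production team actually uses.

```python
import logging

logger = logging.getLogger("restaurant_bot.fallback")

# Hypothetical description of what this bot is designed to do.
CAPABILITIES = ["book a table", "change a booking", "check opening hours"]


def fallback_response(utterance, unmatched_log):
    """Build a reply for an utterance the bot could not match to an intent."""
    # Feed back to the production team: keep the utterance for later review.
    unmatched_log.append(utterance)
    logger.info("Unmatched utterance: %s", utterance)

    options = ", ".join(CAPABILITIES[:-1]) + " or " + CAPABILITIES[-1]
    return (
        f"Sorry, I didn't understand '{utterance}'. "  # clarify
        f"I can help you {options}. "                  # confirm the bot's purpose
        "Which of those would you like to do?"         # call-to-action
    )
```

Reviewing the logged utterances regularly shows which intents or phrasings the bot is missing, which feeds directly into the next round of development.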

Summary

With the proliferation of these devices, it’s no wonder that 85% of customers are predicted to manage their company relationships without involving a human by 2020. Overall, businesses can harness conversational user interfaces to offer:

- Information – offer content e.g. FAQs and news stories

- Transaction – complete a task e.g. book a restaurant

- Advice – learn from interactions to determine the next steps

Our next blog covers how you can choose a technology for your conversational user interface. Through adopting a voice assistant or chatbot, you can offer users:

- Convenience

- Speed

- Availability

- Accessibility

- Productivity

- Human interaction

80% of large companies plan to implement chatbots over the next two years. Don’t let your business fall behind. Cyber-Duck is here to help; we’ve delivered bot-planning workshops with UX Crunch, UX Live and Microsoft Azure, and we’ve written about chatbots in The Drum. Contact Cyber-Duck today for help developing your bot.