The more content your website has, the harder it is to keep it up to date. But the biggest struggle isn’t necessarily choosing what content to delete: it’s ensuring you don’t leave any gaps in your site.

Why remove outdated content?

If you think those untouched, unranked, and unvisited pages are harmless, think again. Think of your website as a backpack. If it’s too full, it’ll weigh you down and tire you out. Worse, when you reach in to find something, it gets lost in a sea of other stuff and takes forever to find. Most of the time, you just give up looking for it altogether.

The same thing happens when Google runs a search. Some of the advice out there suggests getting rid of that old content, but it’s never as easy as that. Simply deleting or moving content around affects your website URL structure and metadata. Yes, you want a lighter, more efficient website, but no, you don’t want to confuse your users and give them a reason to leave.

To help you keep things clean and well-organised, we’ve compiled a list of 11 things to consider before removing outdated content from your website:

- Page traffic – what to keep and what to delete
- Using redirects
- Custom 404 pages
- Internal links and site architecture
- Check breadcrumbs
- Update the HTML sitemap
- Update the XML sitemap
- Review robots.txt file
- “No index” the page
- Set rules for future content
- Test, test, and test again

1. Page traffic – what to keep and what to delete

The first two (and most obvious) things to consider are the amount and quality of traffic a page is getting. Google Analytics is a good place to check both, and if both are low, the decision to remove the content can be pretty clear.

But then there are user experience questions you need to ask yourself, such as:

- Does it satisfy a user need, but traffic is low because they can’t find it?
- Does this page rank in a keyword search?
- Is there already a more updated version of this page?

If the answer is no to all of those questions, the decision is once again obvious. If there’s a yes or two, however, things can be a little trickier. You may find yourself comparing two similar articles to figure out which one makes the cut. Or you might realise that a page satisfies a user need but isn’t easy to find or optimised for search. Then you might decide to keep the page and address those issues instead.
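As a rough illustration, the questions above can be written down as a small triage function. This is only a sketch: the `PageStats` fields, the outcome labels, and the threshold of 50 visits a month are assumptions made for the example, and traffic quality is left out entirely.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    """Hypothetical per-page figures, e.g. exported from your analytics tool."""
    url: str
    monthly_visits: int
    meets_user_need: bool
    ranks_for_keyword: bool
    has_newer_version: bool


def triage(page: PageStats, low_traffic_threshold: int = 50) -> str:
    """Return 'keep', 'improve', 'compare', or 'remove' for one page.

    The threshold is an arbitrary placeholder; pick one that suits your site.
    """
    if page.has_newer_version:
        # Two similar pages exist: compare them before deciding which stays.
        return "compare"
    if page.monthly_visits >= low_traffic_threshold:
        return "keep"
    if page.meets_user_need or page.ranks_for_keyword:
        # Useful but hard to find: fix findability instead of deleting.
        return "improve"
    return "remove"
```

A function like this won't replace a content audit, but it makes the criteria explicit, so everyone on the team applies the same ones.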

This can take time and expertise, and, depending on the size of your website, might call for a full content audit.

A content audit allows you to assess the entirety of your website’s content. It can be a heavy lift, but the insight is extremely valuable, so it’s often outsourced to a trusted third party. After the audit, your partners will recommend which pages to keep, update, and get rid of altogether.

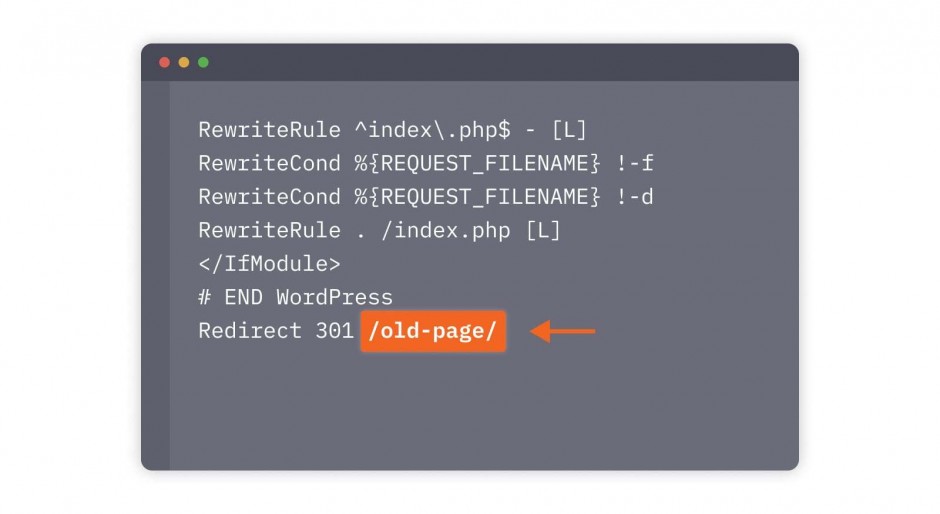

2. Using redirects

Use 301 redirects when removing content from website

Deleting a page can disrupt your inbound links, leaving visitors with the dreaded “404 Page Not Found” page. This is where a 301 Redirect comes in.

As Laura Devonald from our friends Zest Digital told me, “It’s better to update a link to go to the most relevant page on your website than to lose the link completely. It stops visitors from closing the tab because they’ve reached a dead-end. Instead, send them to the next best option to keep them on your website longer.”

This doesn’t mean you should simply create a redirect for all your pages. As with everything else in your removal process, you’ll want to make sure the page is getting traffic, has backlinks, and/or is highly ranked for targeted keywords. If it has one or more of these, then consider the redirect. If not, it might be better to simply delete the page altogether and return a 410 (Gone) status, which tells search engines the page has been removed on purpose and isn’t coming back.
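In practice, the choice between a 301 and a 410 often boils down to two lookups. The sketch below assumes you keep a hand-maintained map of redirects and a set of retired URLs. The paths, the `respond` function, and the idea that every other URL is live are all hypothetical simplifications; a real site would configure this in its web server or CMS.

```python
from typing import Optional, Tuple

# Hypothetical: removed URLs that still earn traffic, backlinks, or rankings,
# mapped to the most relevant live page.
REDIRECTS = {
    "/blog/2015-pricing": "/pricing",
}

# Hypothetical: removed URLs with nothing worth preserving.
GONE = {"/blog/office-party-2012"}


def respond(path: str) -> Tuple[int, Optional[str]]:
    """Return (status code, redirect target) for a requested path."""
    if path in REDIRECTS:
        return 301, REDIRECTS[path]  # moved permanently
    if path in GONE:
        return 410, None             # gone: removed on purpose
    return 200, None                 # simplification: treat the rest as live
```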

3. Custom 404 pages

If you’re going to get rid of a page and a redirect isn’t an option, the next best thing to consider is a customised, user-friendly 404 page.

Again, a 404 page is what users see when they try to reach a non-existent page. The idea behind customising that page is to both showcase your brand’s personality and to be helpful. For example, you could include a fun meme and/or use CTAs to send visitors to your most-read content, an FAQ section, or a product page. Optinmonster have compiled some great examples.

4. Internal links and site architecture

Update internal links when removing content from website

Removing pages – and therefore internal links – from your website can disrupt its architecture. That’s why, after redirects and custom 404 pages, you’ll want to review your website for any internal links that would bring users to the deleted pages. Then you can either remove the internal link altogether or update it to send the user directly to the most relevant content on your website.

Try not to rely on 301 redirects as a blanket fix for broken internal links. Every redirected link costs the browser and the crawler an extra request, which can slow your pages down and eat into your crawl budget. Neither is good for the UX or SEO of your website.
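Finding those internal links by hand gets tedious on a large site. Here is a minimal sketch using only Python's standard library that lists the links in a page's HTML pointing at removed paths. The function and class names are made up for the example, and it compares paths only, so an external link that happens to share a path would also be flagged.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def links_to_removed(html: str, removed_paths: set) -> list:
    """Return the hrefs in `html` whose path is one of `removed_paths`."""
    collector = LinkCollector()
    collector.feed(html)
    # urlparse drops the query string and fragment, so "/old-page#top"
    # and "/old-page?ref=nav" both count as "/old-page".
    return [h for h in collector.hrefs if urlparse(h).path in removed_paths]
```

Run it over each page's HTML and you have a to-do list of links to remove or repoint.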

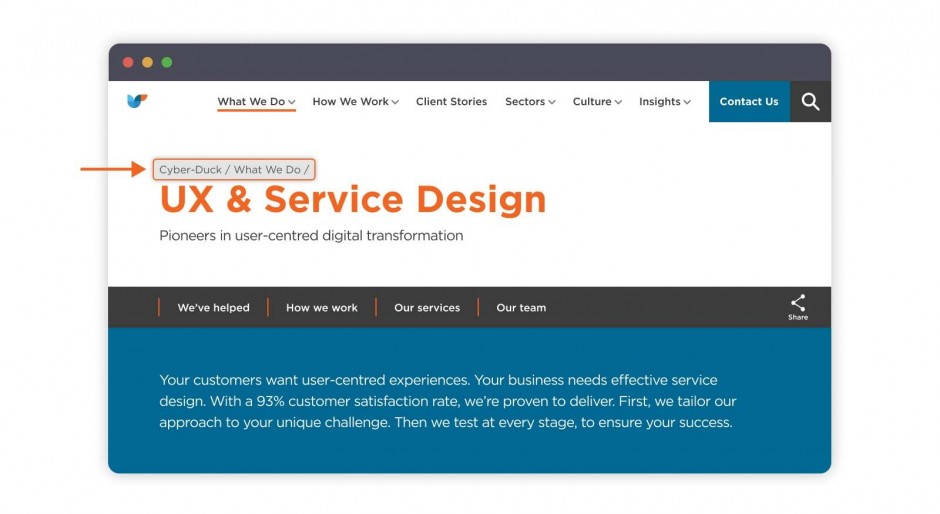

5. Check breadcrumbs

Update breadcrumbs when removing content from website

This is connected to reviewing your internal links. If you've set up a trail of breadcrumbs for users to follow, you have to ensure that you haven't left any gaps in the path.

Breadcrumbs are the secondary navigation trail at the top of a page that lets users backtrack through the levels above it. When you have many pages on your site, breadcrumbs become extremely useful. However, removing a page risks breaking the trail.

A missing breadcrumb should be replaced, or the breadcrumbs on either side should be bridged together.
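Both fixes can be sketched in a few lines. The example assumes a trail is stored as a list of `(label, url)` pairs, which is an illustrative choice rather than how any particular CMS does it; `repair_trail` and its parameters are hypothetical names.

```python
def repair_trail(trail, removed, replacements=None):
    """Return a breadcrumb trail with removed pages replaced or bridged.

    trail:        list of (label, url) pairs, from the home page down
    removed:      set of URLs that no longer exist
    replacements: optional dict mapping a removed URL to a new (label, url)
    """
    replacements = replacements or {}
    repaired = []
    for label, url in trail:
        if url not in removed:
            repaired.append((label, url))
        elif url in replacements:
            # Replace the missing crumb with its stand-in.
            repaired.append(replacements[url])
        # Otherwise drop it, so the crumbs on either side sit next to each other.
    return repaired
```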

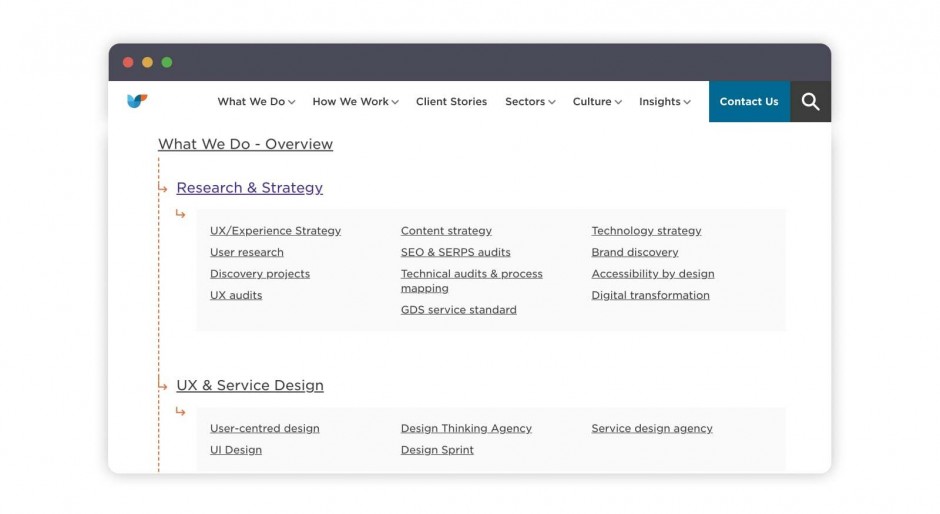

6. Update the HTML sitemap

Update HTML sitemap when removing content from website

If you don’t have an HTML sitemap, you should really think about implementing one. If you do and you’re removing content from your website, you’ll need to update your HTML sitemap so that it no longer includes any deleted pages. An HTML sitemap is just an ordinary page of links, so editing it usually means deleting the entries for the removed pages, either by hand or through your CMS if it generates the sitemap for you.

It should be easy for you to access the HTML sitemap and make minor changes, even if you outsourced your website's initial design and development. If, however, you outsourced and are making significant changes, you may want to contact the developer to ask for assistance. Your HTML sitemap needs to stay up to date because it helps visitors to trace content on your site.
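If your HTML sitemap is generated rather than hand-edited, the update can be as simple as rebuilding the list of links without the removed pages. The sketch below assumes you have your pages as `(title, url)` pairs; `render_html_sitemap` is a made-up helper, not part of any CMS.

```python
from html import escape


def render_html_sitemap(pages, removed):
    """Build a <ul> of links from (title, url) pairs, skipping removed URLs."""
    items = [
        f'  <li><a href="{escape(url, quote=True)}">{escape(title)}</a></li>'
        for title, url in pages
        if url not in removed
    ]
    return "<ul>\n" + "\n".join(items) + "\n</ul>"
```

Escaping titles and URLs keeps characters such as `&` from breaking the markup.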

7. Update the XML sitemap

Update XML sitemap when removing content from website

Search engines use your XML sitemap to find and index pages. If they have indexed a page that no longer exists, you’ll be sending your customers right to that dreaded dead-end page.

An XML sitemap is case sensitive, requires closing tags, and doesn't tolerate coding errors: a single malformed tag can stop search engines from reading the file. The format itself is simple, with one entry per page holding that page's address, but whoever edits it should be comfortable with basic XML syntax.

Unless you’re familiar with XML, seek advice from someone experienced with the format to ensure you don't remove the wrong thing.
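One way to lower the risk of a stray edit is to let a parser do the removal instead of deleting lines by hand. This sketch uses Python's standard `xml.etree.ElementTree` and assumes a standard sitemap in the sitemaps.org namespace; `remove_from_sitemap` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
ET.register_namespace("", NS)  # write the namespace back without a prefix


def remove_from_sitemap(xml_text: str, removed_urls: set) -> str:
    """Return the sitemap XML with the <url> entries for removed pages dropped."""
    root = ET.fromstring(xml_text)
    for url_el in list(root.findall(f"{{{NS}}}url")):
        loc = url_el.find(f"{{{NS}}}loc")
        if loc is not None and (loc.text or "").strip() in removed_urls:
            root.remove(url_el)
    return ET.tostring(root, encoding="unicode")
```

Because the parser rejects malformed XML outright, a broken file fails loudly here rather than quietly in front of a search engine. Note that the returned string has no XML declaration line, so add one back if your setup expects it.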

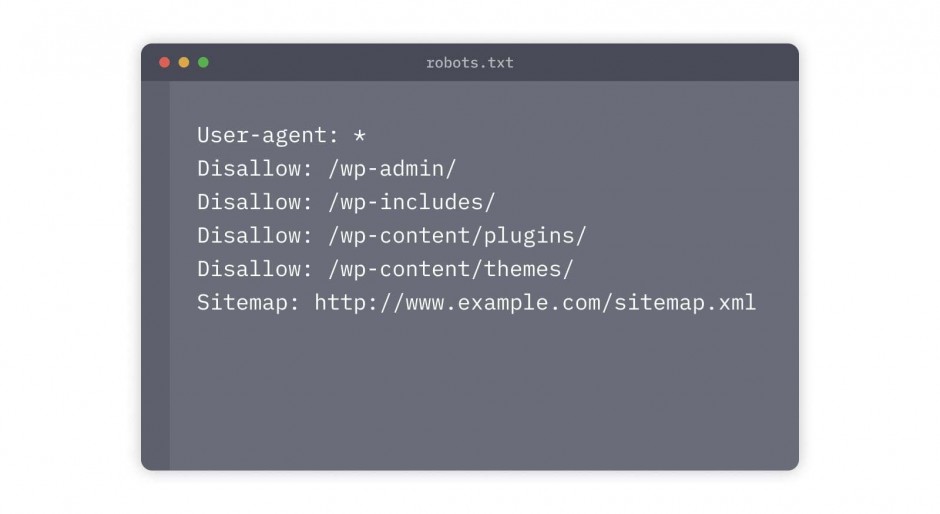

8. Review robots.txt file

Update robots.txt when removing content from website

Your robots.txt file tells search engines which pages or files the crawler can or can't request from your site. Take the time to go over your robots.txt file and remove any reference to content you have removed.

You can update your robots.txt file in any text editor, and each host has only one, at the root of the site. Go through the file and remove any rules that refer to your deleted content, so that it only describes pages that still exist. Clearing out a stale rule also lets crawlers reach the old URL, see that it now redirects or is gone, and update their index accordingly.

Remember that robots.txt is mainly a way to manage crawler traffic so your site isn't overloaded with requests. It won't keep a page that still exists out of search results. If you're looking to hide a page, you have to use a noindex tag.
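Spotting stale rules in a long robots.txt file is easy to automate. The sketch below flags `Allow` and `Disallow` lines whose path exactly matches a removed page. It is deliberately simple: `stale_rules` is a hypothetical helper, and it doesn't handle wildcards or rules that cover a whole directory.

```python
def stale_rules(robots_txt: str, removed_paths: set) -> list:
    """Return (line number, line) pairs for rules that name a removed path."""
    stale = []
    for number, line in enumerate(robots_txt.splitlines(), start=1):
        rule = line.split("#", 1)[0].strip()  # ignore trailing comments
        field, _, value = rule.partition(":")
        is_path_rule = field.strip().lower() in {"allow", "disallow"}
        if is_path_rule and value.strip() in removed_paths:
            stale.append((number, line.strip()))
    return stale
```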

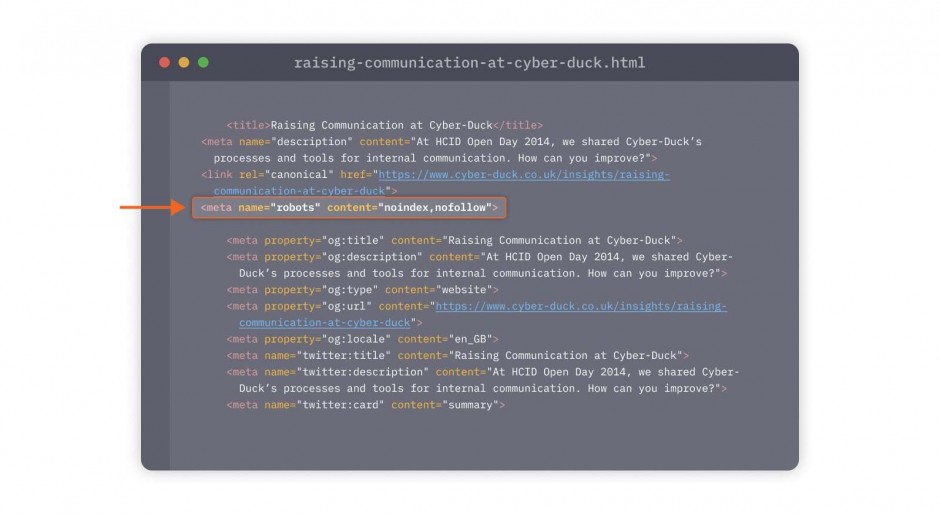

9. "No Index" the page

Using No Index tag to prevent search engines from displaying your web page

Instead of completely deleting the page, you can tell search engines to leave it out of their results by adding a “noindex” meta tag. Most content management systems or SEO plugins have a setting for this, which adds the tag to the page's code for you.

It’s important you know the difference between blocking content via a robots.txt file and removing content from the Google index with a noindex tag:

- robots.txt file: Prevents search engines from crawling a page or a section of your website, which helps preserve your crawl budget when those pages have little or no value. It only tells search engines not to crawl that area, though; the content can still be indexed. For example, if an external website links to a page that is blocked in your robots.txt file, that page can still appear in search engine results.
- No index tag: This tag specifically tells search engines that you do not want this content to be displayed in their search results. Once a search engine has recrawled the page and seen the tag, it drops the page from its results. For that to happen the page must stay crawlable, so don't block it in robots.txt as well.
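The tag itself is a single line in the page's head: `<meta name="robots" content="noindex">`. If you want to confirm that your CMS or plugin really added it, a small check like the one below can help. It is a sketch using Python's standard HTML parser; the class and function names are invented for the example, and it only looks at the generic `robots` meta tag, not crawler-specific tags or HTTP headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Records whether a <meta name="robots"> tag contains 'noindex'."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            directives = [d.strip().lower() for d in attr.get("content", "").split(",")]
            if "noindex" in directives:
                self.noindex = True


def has_noindex(html: str) -> bool:
    """Return True if the page's HTML carries a robots noindex meta tag."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.noindex
```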

10. Set rules for future content

After all that work, you don’t want to find yourself going through the same exercise a few months from now. Taking the time to declutter can be taxing, so do what you can to prevent having to comb through your site more often than you need to.

Camilla West of Scroll reminds us that everything you publish should have a user need. “Your company should have a set process for writing, editing, proofing, and publishing to standardise your content,” she says. Camilla also advises deciding early on when you plan to review published content, to ensure it's still relevant.

11. Test, test, and test again

Amber Vellacott of Sleeping Giant Media says it best: When you think you're done, run as many tests as possible to ensure you didn't miss anything. “Checking will ensure they're still working, and not double counting,” she says. Tools such as Google Analytics, Facebook Pixel, and Google Tag Manager can help you cover all of your bases.
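One test you can automate is checking that every URL you touched now returns the status you intended: 301 for redirects, 410 for retired pages, 200 for pages you kept. The sketch below is one way to do that with Python's standard library. The names `fetch_status` and `audit` are hypothetical, and it assumes your server answers HEAD requests.

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, List, Tuple


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    """Stops urllib from following redirects so the 301 itself is reported."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None


def fetch_status(url: str) -> int:
    """Return the HTTP status code for a HEAD request to `url`."""
    opener = urllib.request.build_opener(_NoRedirect)
    request = urllib.request.Request(url, method="HEAD")
    try:
        with opener.open(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 3xx, 4xx, and 5xx responses arrive as HTTPError


def audit(
    expected: Dict[str, int],
    fetch: Callable[[str], int] = fetch_status,
) -> List[Tuple[str, int, int]]:
    """Return (url, expected, actual) for every URL with the wrong status."""
    failures = []
    for url, want in expected.items():
        got = fetch(url)
        if got != want:
            failures.append((url, want, got))
    return failures
```

An empty list from `audit` means every URL behaved as planned. The `fetch` parameter is there so you can swap in a stub and try the logic without touching a live site.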

And with all that, your site should now be ready to go! Well, theoretically…

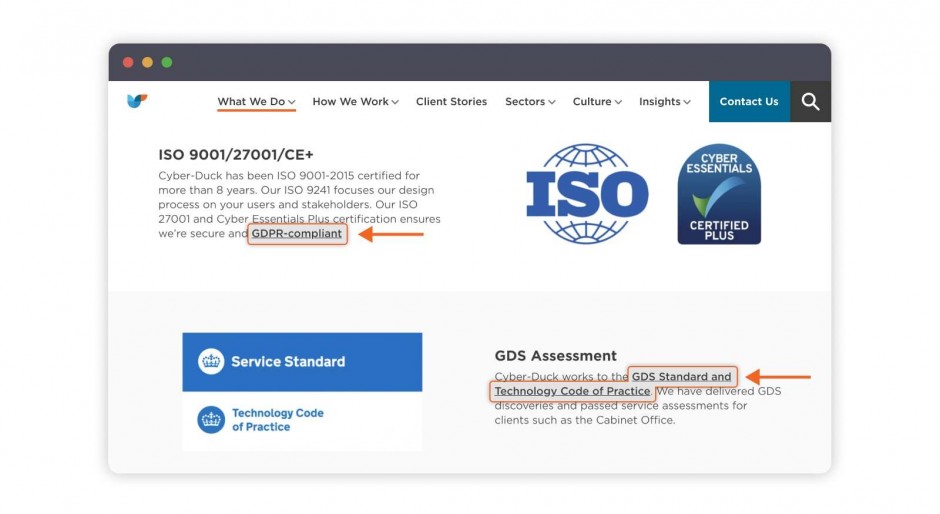

At Cyber-Duck, we understand that updating your site can be a more time-consuming task than expected. And although we don’t have a magic button, we do have the content management expertise to make sure your website is nothing less than stellar.

Contact us today to chat through your content challenge – we’re ready to help. We can also offer a free UX audit to identify where you can make improvements.